Introduction to HashMap

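HashMap is the data type Java programmers use most often for handling mappings (key-value pairs). Most blog posts about HashMap describe JDK 1.6. In JDK 1.6, HashMap is implemented as a bucket array plus linked lists: hash collisions are resolved by chaining, so all elements that map to the same bucket are stored in one linked list. When a bucket holds many elements, that is, when many keys collide, finding a key by walking the list node by node is slow. In JDK 1.8, HashMap is implemented as a bucket array plus linked lists plus red-black trees: when a list grows beyond the threshold (8) and the table has at least 64 buckets, the list is converted to a red-black tree (a smaller table is resized instead), which reduces the worst-case lookup in that bucket from O(n) to O(log n).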

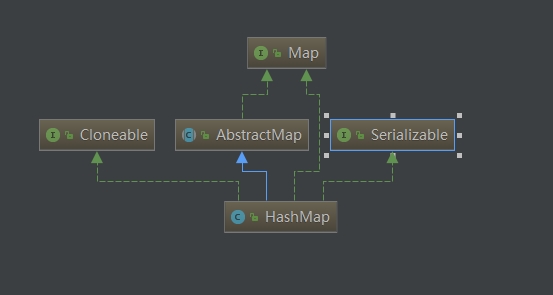

HashMap structure

    public class HashMap<K,V> extends AbstractMap<K,V>
        implements Map<K,V>, Cloneable, Serializable

HashMap is a hash table whose contents are key-value mappings. The node class that actually stores the data, Node<K,V>, implements the Map.Entry<K,V> interface.

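Member variables

    // Default table capacity (16); the capacity is always a power of two.
    static final int DEFAULT_INITIAL_CAPACITY = 1 << 4;
    // Maximum table capacity (2^30).
    static final int MAXIMUM_CAPACITY = 1 << 30;
    // Load factor used when none is given to a constructor.
    static final float DEFAULT_LOAD_FACTOR = 0.75f;
    // A bin is treeified when an element is added to a bin that already has this many nodes.
    static final int TREEIFY_THRESHOLD = 8;
    // A tree bin is converted back to a list when it shrinks to this size during resize.
    static final int UNTREEIFY_THRESHOLD = 6;
    // Bins are treeified only if the table has at least this many buckets; otherwise it is resized.
    static final int MIN_TREEIFY_CAPACITY = 64;

    transient Node<K,V>[] table;            // bucket array, allocated on first use
    transient Set<Map.Entry<K,V>> entrySet; // cached entrySet() view
    transient int size;                     // number of key-value mappings stored
    transient int modCount;                 // number of structural modifications (used for fail-fast)
    int threshold;                          // size at which the next resize happens (capacity * load factor)
    final float loadFactor;                 // load factor of the table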

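table is an array of Node<K,V> (Node implements Map.Entry<K,V>; in JDK 1.7 and earlier the class was called Entry). Each node stores one concrete key-value pair. size is the size of the HashMap, that is, the number of key-value pairs it actually holds.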

Constructors

    public HashMap() {
        this.loadFactor = DEFAULT_LOAD_FACTOR; // all other fields keep their default values
    }

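The default constructor initializes the HashMap and sets the load factor to 0.75. Note that table has not been allocated on the heap at this point; it is initialized only when the first put operation runs.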

    public HashMap(int initialCapacity, float loadFactor) {
        if (initialCapacity < 0)
            throw new IllegalArgumentException("Illegal initial capacity: " +
                                               initialCapacity);
        if (initialCapacity > MAXIMUM_CAPACITY)
            initialCapacity = MAXIMUM_CAPACITY;
        if (loadFactor <= 0 || Float.isNaN(loadFactor))
            throw new IllegalArgumentException("Illegal load factor: " +
                                               loadFactor);
        this.loadFactor = loadFactor;
        this.threshold = tableSizeFor(initialCapacity);
    }

    public HashMap(int initialCapacity) {
        this(initialCapacity, DEFAULT_LOAD_FACTOR);
    }

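These constructors initialize the HashMap from an initial capacity and a load factor. The table is still not allocated: the initial capacity, rounded up to a power of two, is kept in threshold until the first resize() call uses it as the table size.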

    static final int tableSizeFor(int cap) {
        int n = cap - 1;
        n |= n >>> 1;
        n |= n >>> 2;
        n |= n >>> 4;
        n |= n >>> 8;
        n |= n >>> 16;
        return (n < 0) ? 1 : (n >= MAXIMUM_CAPACITY) ? MAXIMUM_CAPACITY : n + 1;
    }

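tableSizeFor computes the table capacity that the constructor stores temporarily in threshold. tableSizeFor(initialCapacity) returns the smallest power of two that is greater than or equal to initialCapacity, capped at MAXIMUM_CAPACITY.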
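To make the rounding concrete, here is a minimal sketch that copies the bit-smearing logic quoted above into a standalone class so it can be run directly. The class name TableSizeForDemo is made up for illustration and is not part of the JDK.

```java
final class TableSizeForDemo {
    static final int MAXIMUM_CAPACITY = 1 << 30;

    // Same logic as the method quoted above, copied so it runs on its own.
    static int tableSizeFor(int cap) {
        int n = cap - 1;   // subtract 1 so an exact power of two maps to itself
        n |= n >>> 1;
        n |= n >>> 2;
        n |= n >>> 4;
        n |= n >>> 8;
        n |= n >>> 16;     // every bit below the highest set bit is now 1
        return (n < 0) ? 1 : (n >= MAXIMUM_CAPACITY) ? MAXIMUM_CAPACITY : n + 1;
    }

    public static void main(String[] args) {
        for (int cap : new int[] {0, 1, 10, 16, 17, 1000}) {
            System.out.println(cap + " -> " + tableSizeFor(cap));
        }
    }
}
```

For these inputs the sketch prints 1, 1, 16, 16, 32 and 1024 respectively: 16 is already a power of two and is returned unchanged, while 17 is rounded up to 32.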

    public HashMap(Map<? extends K, ? extends V> m) {
        this.loadFactor = DEFAULT_LOAD_FACTOR;
        putMapEntries(m, false);
    }

    final void putMapEntries(Map<? extends K, ? extends V> m, boolean evict) {
        int s = m.size();
        if (s > 0) {
            if (table == null) { // table not allocated yet: pre-size from the source map
                float ft = ((float)s / loadFactor) + 1.0F;
                int t = ((ft < (float)MAXIMUM_CAPACITY) ?
                         (int)ft : MAXIMUM_CAPACITY);
                if (t > threshold)
                    threshold = tableSizeFor(t);
            }
            else if (s > threshold) // table exists but is too small
                resize();
            for (Map.Entry<? extends K, ? extends V> e : m.entrySet()) {
                K key = e.getKey();
                V value = e.getValue();
                putVal(hash(key), key, value, false, evict);
            }
        }
    }
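The pre-sizing arithmetic in putMapEntries is easier to follow with numbers. The sketch below reproduces only the "table == null" branch for an empty target map (threshold still 0); PresizeDemo and presizedCapacity are hypothetical names used for illustration.

```java
final class PresizeDemo {
    static final int MAXIMUM_CAPACITY = 1 << 30;

    // Copy of HashMap.tableSizeFor so the sketch is self-contained.
    static int tableSizeFor(int cap) {
        int n = cap - 1;
        n |= n >>> 1;
        n |= n >>> 2;
        n |= n >>> 4;
        n |= n >>> 8;
        n |= n >>> 16;
        return (n < 0) ? 1 : (n >= MAXIMUM_CAPACITY) ? MAXIMUM_CAPACITY : n + 1;
    }

    // Capacity chosen when s entries are copied into a map with no table yet.
    static int presizedCapacity(int s, float loadFactor) {
        float ft = ((float) s / loadFactor) + 1.0F;
        int t = (ft < (float) MAXIMUM_CAPACITY) ? (int) ft : MAXIMUM_CAPACITY;
        return tableSizeFor(t);
    }

    public static void main(String[] args) {
        for (int s : new int[] {6, 12, 13, 24}) {
            System.out.println(s + " entries -> capacity " + presizedCapacity(s, 0.75f));
        }
    }
}
```

With a load factor of 0.75, copying 12 entries gives 12 / 0.75 + 1 = 17, which rounds up to a capacity of 32, so the copy does not trigger a resize while the entries are being inserted.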

Important methods

put() and putVal()

    public V put(K key, V value) {
        return putVal(hash(key), key, value, false, true);
    }

    static final int hash(Object key) {
        int h;
        return (key == null) ? 0 : (h = key.hashCode()) ^ (h >>> 16);
    }

    final V putVal(int hash, K key, V value, boolean onlyIfAbsent,
                   boolean evict) {
        Node<K,V>[] tab; Node<K,V> p; int n, i;
        // Table not allocated yet: allocate it through resize().
        if ((tab = table) == null || (n = tab.length) == 0)
            n = (tab = resize()).length;
        // Target bucket is empty: store the new node directly.
        if ((p = tab[i = (n - 1) & hash]) == null)
            tab[i] = newNode(hash, key, value, null);
        else {
            Node<K,V> e; K k;
            // The head node of the bucket has the same key.
            if (p.hash == hash &&
                ((k = p.key) == key || (key != null && key.equals(k))))
                e = p;
            // The bucket is a red-black tree.
            else if (p instanceof TreeNode)
                e = ((TreeNode<K,V>)p).putTreeVal(this, tab, hash, key, value);
            // The bucket is a linked list: walk it.
            else {
                for (int binCount = 0; ; ++binCount) {
                    if ((e = p.next) == null) {
                        // End of the list reached: append, then treeify if the list got too long.
                        p.next = newNode(hash, key, value, null);
                        if (binCount >= TREEIFY_THRESHOLD - 1)
                            treeifyBin(tab, hash);
                        break;
                    }
                    if (e.hash == hash &&
                        ((k = e.key) == key || (key != null && key.equals(k))))
                        break;
                    p = e;
                }
            }
            // The key already exists: update the value if allowed and return the old one.
            if (e != null) {
                V oldValue = e.value;
                if (!onlyIfAbsent || oldValue == null)
                    e.value = value;
                afterNodeAccess(e);
                return oldValue;
            }
        }
        ++modCount;
        if (++size > threshold)
            resize();
        afterNodeInsertion(evict);
        return null;
    }
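The return values of put follow directly from the putVal source above. The short usage sketch below illustrates them; PutDemo is a hypothetical class name.

```java
import java.util.HashMap;
import java.util.Map;

final class PutDemo {
    static String run() {
        Map<String, Integer> m = new HashMap<>();
        Integer first = m.put("a", 1);   // new key: returns null
        Integer second = m.put("a", 2);  // existing key: value replaced, old value returned
        Integer third = m.put(null, 3);  // a null key is allowed; its hash is 0
        return first + "," + second + "," + third + ","
             + m.get("a") + "," + m.get(null) + "," + m.size();
    }

    public static void main(String[] args) {
        System.out.println(run()); // null,1,null,2,3,2
    }
}
```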

putAll() and putIfAbsent()

    public void putAll(Map<? extends K, ? extends V> m) {
        putMapEntries(m, true);
    }

    // If the key is not associated with a value (or is mapped to null), associate it
    // with the given value and return null; otherwise return the existing value.
    public V putIfAbsent(K key, V value) {
        return putVal(hash(key), key, value, true, true);
    }

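For putIfAbsent, the putVal source shows that when onlyIfAbsent is true and the key already exists with a non-null value, the value is not overwritten. If the key exists but is mapped to null, the new value is stored.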
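The three cases (key present with a value, key present but mapped to null, key absent) can be checked with a small usage sketch; PutIfAbsentDemo is a hypothetical class name.

```java
import java.util.HashMap;
import java.util.Map;

final class PutIfAbsentDemo {
    static String run() {
        Map<String, String> m = new HashMap<>();
        m.put("k1", "old");
        m.put("k2", null);                      // key present, but mapped to null
        String r1 = m.putIfAbsent("k1", "new"); // returns "old"; value is kept
        String r2 = m.putIfAbsent("k2", "new"); // returns null; the null value is replaced
        String r3 = m.putIfAbsent("k3", "new"); // returns null; a new mapping is created
        return r1 + "," + r2 + "," + r3 + ","
             + m.get("k1") + "," + m.get("k2") + "," + m.get("k3");
    }

    public static void main(String[] args) {
        System.out.println(run()); // old,null,null,old,new,new
    }
}
```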

resize(): the method that grows the HashMap

    final Node<K,V>[] resize() {
        Node<K,V>[] oldTab = table;
        int oldCap = (oldTab == null) ? 0 : oldTab.length;
        int oldThr = threshold;
        int newCap, newThr = 0;
        if (oldCap > 0) {
            // Already at maximum capacity: stop growing.
            if (oldCap >= MAXIMUM_CAPACITY) {
                threshold = Integer.MAX_VALUE;
                return oldTab;
            }
            // Otherwise double the capacity and the threshold.
            else if ((newCap = oldCap << 1) < MAXIMUM_CAPACITY &&
                     oldCap >= DEFAULT_INITIAL_CAPACITY)
                newThr = oldThr << 1;
        }
        else if (oldThr > 0) // initial capacity was placed in threshold by a constructor
            newCap = oldThr;
        else {               // no initial capacity given: use the defaults
            newCap = DEFAULT_INITIAL_CAPACITY;
            newThr = (int)(DEFAULT_LOAD_FACTOR * DEFAULT_INITIAL_CAPACITY);
        }
        if (newThr == 0) {
            float ft = (float)newCap * loadFactor;
            newThr = (newCap < MAXIMUM_CAPACITY && ft < (float)MAXIMUM_CAPACITY ?
                      (int)ft : Integer.MAX_VALUE);
        }
        threshold = newThr;
        @SuppressWarnings({"rawtypes","unchecked"})
        Node<K,V>[] newTab = (Node<K,V>[])new Node[newCap];
        table = newTab;
        if (oldTab != null) {
            // Move every bucket of the old table into the new table.
            for (int j = 0; j < oldCap; ++j) {
                Node<K,V> e;
                if ((e = oldTab[j]) != null) {
                    oldTab[j] = null;
                    if (e.next == null) // single node: recompute its index
                        newTab[e.hash & (newCap - 1)] = e;
                    else if (e instanceof TreeNode) // tree bin: split the tree
                        ((TreeNode<K,V>)e).split(this, newTab, j, oldCap);
                    else { // linked list: split into a "lo" and a "hi" list, preserving order
                        Node<K,V> loHead = null, loTail = null;
                        Node<K,V> hiHead = null, hiTail = null;
                        Node<K,V> next;
                        do {
                            next = e.next;
                            if ((e.hash & oldCap) == 0) { // stays at index j
                                if (loTail == null)
                                    loHead = e;
                                else
                                    loTail.next = e;
                                loTail = e;
                            }
                            else { // moves to index j + oldCap
                                if (hiTail == null)
                                    hiHead = e;
                                else
                                    hiTail.next = e;
                                hiTail = e;
                            }
                        } while ((e = next) != null);
                        if (loTail != null) {
                            loTail.next = null;
                            newTab[j] = loHead;
                        }
                        if (hiTail != null) {
                            hiTail.next = null;
                            newTab[j + oldCap] = hiHead;
                        }
                    }
                }
            }
        }
        return newTab;
    }
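As a rough illustration of the growth policy in resize(), the sketch below simulates only the capacity and threshold bookkeeping for a map created with new HashMap<>(). It assumes distinct keys and ignores the extra resizes that treeifyBin can trigger on small tables; GrowthDemo and capacityAfter are hypothetical names.

```java
final class GrowthDemo {
    // Capacity of a default HashMap after the given number of distinct keys
    // (at least one) has been inserted, following the doubling rule in resize().
    static int capacityAfter(int inserts) {
        int cap = 16;                 // DEFAULT_INITIAL_CAPACITY
        int thr = 12;                 // (int)(0.75f * 16)
        for (int size = 1; size <= inserts; size++) {
            if (size > thr) {         // same test as "++size > threshold" in putVal
                cap <<= 1;            // newCap = oldCap << 1
                thr <<= 1;            // newThr = oldThr << 1
            }
        }
        return cap;
    }

    public static void main(String[] args) {
        for (int n : new int[] {12, 13, 24, 25}) {
            System.out.println(n + " inserts -> capacity " + capacityAfter(n));
        }
    }
}
```

Under these assumptions the 13th insertion doubles the table from 16 to 32 buckets and the 25th doubles it again to 64.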

clear()

    public void clear() {
        Node<K,V>[] tab;
        modCount++;
        if ((tab = table) != null && size > 0) {
            size = 0;
            for (int i = 0; i < tab.length; ++i)
                tab[i] = null;
        }
    }

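clear sets every slot of the array to null and resets size, the number of entries actually stored, to 0. The array itself keeps its length.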

containsKey()

    public boolean containsKey(Object key) {
        return getNode(hash(key), key) != null;
    }

    final Node<K,V> getNode(int hash, Object key) {
        Node<K,V>[] tab; Node<K,V> first, e; int n; K k;
        if ((tab = table) != null && (n = tab.length) > 0 &&
            (first = tab[(n - 1) & hash]) != null) {
            if (first.hash == hash && // always check first node
                ((k = first.key) == key || (key != null && key.equals(k))))
                return first;
            if ((e = first.next) != null) {
                if (first instanceof TreeNode)
                    return ((TreeNode<K,V>)first).getTreeNode(hash, key);
                do {
                    if (e.hash == hash &&
                        ((k = e.key) == key || (key != null && key.equals(k))))
                        return e;
                } while ((e = e.next) != null);
            }
        }
        return null;
    }

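To decide whether a key exists, getNode first uses the key's hash to locate the bucket in the array, then searches the linked list or tree stored in that bucket for a matching node. If no node is found, containsKey returns false.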

containsValue()

    public boolean containsValue(Object value) {
        Node<K,V>[] tab; V v;
        if ((tab = table) != null && size > 0) {
            for (int i = 0; i < tab.length; ++i) {
                for (Node<K,V> e = tab[i]; e != null; e = e.next) {
                    if ((v = e.value) == value ||
                        (value != null && value.equals(v)))
                        return true;
                }
            }
        }
        return false;
    }

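Starting at index 0 of the array, every node in every bucket is visited in turn until a matching value is found, so the cost is linear in the size of the map. (TreeNode is a subclass of Node and also has a next link, so tree bins can be walked the same way.)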

forEach()

    public void forEach(BiConsumer<? super K, ? super V> action) {
        Node<K,V>[] tab;
        if (action == null)
            throw new NullPointerException();
        if (size > 0 && (tab = table) != null) {
            int mc = modCount;
            for (int i = 0; i < tab.length; ++i) {
                for (Node<K,V> e = tab[i]; e != null; e = e.next)
                    action.accept(e.key, e.value);
            }
            // A structural change made by the action is detected after the traversal.
            if (modCount != mc)
                throw new ConcurrentModificationException();
        }
    }

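A method added in Java 8 that, together with functional interfaces and lambda expressions, iterates over the entries. The action may modify the state of the stored objects, but it must not change the structure of the map (add or remove keys). Usage example: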

    Map<String, Object> c = new HashMap<>();
    c.put("1", 1);
    c.put("2", 2);
    c.forEach((s, o) -> {
        System.out.println(s + o); // prints 11 and 22
    });
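The difference between changing a stored object and changing the map's structure inside forEach can be sketched as follows. ForEachDemo and its method names are hypothetical; the behavior relies on the modCount check visible in the forEach source.

```java
import java.util.ConcurrentModificationException;
import java.util.HashMap;
import java.util.Map;

final class ForEachDemo {
    // Mutating the state of a stored value inside forEach is allowed.
    static String mutateValues() {
        Map<String, StringBuilder> m = new HashMap<>();
        m.put("1", new StringBuilder("a"));
        m.put("2", new StringBuilder("b"));
        m.forEach((k, v) -> v.append(k)); // changes the objects, not the map structure
        return m.get("1") + "," + m.get("2");
    }

    // Adding a new key inside forEach changes modCount and is reported.
    static boolean structuralChangeThrows() {
        Map<String, Integer> m = new HashMap<>();
        m.put("1", 1);
        m.put("2", 2);
        try {
            m.forEach((k, v) -> m.put("new", 0));
            return false;
        } catch (ConcurrentModificationException expected) {
            return true;
        }
    }

    public static void main(String[] args) {
        System.out.println(mutateValues());           // a1,b2
        System.out.println(structuralChangeThrows()); // true
    }
}
```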

get() and getOrDefault()

    // Returns null when the key does not exist.
    public V get(Object key) {
        Node<K,V> e;
        // getNode locates the bucket from the key's hash, then searches the
        // linked list or tree stored in that bucket for the matching node.
        return (e = getNode(hash(key), key)) == null ? null : e.value;
    }

    // Returns defaultValue when the key does not exist.
    public V getOrDefault(Object key, V defaultValue) {
        Node<K,V> e;
        return (e = getNode(hash(key), key)) == null ? defaultValue : e.value;
    }
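One consequence of the code above is that get cannot distinguish a missing key from a key mapped to null, and getOrDefault falls back to the default only when no node exists. A small usage sketch (GetDemo is a hypothetical class name):

```java
import java.util.HashMap;
import java.util.Map;

final class GetDemo {
    static String run() {
        Map<String, String> m = new HashMap<>();
        m.put("present", "v");
        m.put("nullValue", null);
        return m.get("missing") + ","                  // null: no such key
             + m.get("nullValue") + ","                // null: key exists, value is null
             + m.containsKey("nullValue") + ","        // true: tells the two cases apart
             + m.getOrDefault("missing", "dflt") + "," // dflt: no node found
             + m.getOrDefault("nullValue", "dflt");    // null: the node exists
    }

    public static void main(String[] args) {
        System.out.println(run()); // null,null,true,dflt,null
    }
}
```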

isEmpty()

    // Decides emptiness from the number of entries actually stored.
    public boolean isEmpty() {
        return size == 0;
    }

remove()

    public V remove(Object key) {
        Node<K,V> e;
        return (e = removeNode(hash(key), key, null, false, true)) == null ?
            null : e.value;
    }

    public boolean remove(Object key, Object value) {
        return removeNode(hash(key), key, value, true, true) != null;
    }

    final Node<K,V> removeNode(int hash, Object key, Object value,
                               boolean matchValue, boolean movable) {
        Node<K,V>[] tab; Node<K,V> p; int n, index;
        if ((tab = table) != null && (n = tab.length) > 0 &&
            (p = tab[index = (n - 1) & hash]) != null) {
            Node<K,V> node = null, e; K k; V v;
            // Step 1: find the node for the key (head node, tree, or list).
            if (p.hash == hash &&
                ((k = p.key) == key || (key != null && key.equals(k))))
                node = p;
            else if ((e = p.next) != null) {
                if (p instanceof TreeNode)
                    node = ((TreeNode<K,V>)p).getTreeNode(hash, key);
                else {
                    do {
                        if (e.hash == hash &&
                            ((k = e.key) == key ||
                             (key != null && key.equals(k)))) {
                            node = e;
                            break;
                        }
                        p = e; // p trails one node behind e
                    } while ((e = e.next) != null);
                }
            }
            // Step 2: unlink it, checking the value first when matchValue is true.
            if (node != null && (!matchValue || (v = node.value) == value ||
                                 (value != null && value.equals(v)))) {
                if (node instanceof TreeNode)
                    ((TreeNode<K,V>)node).removeTreeNode(this, tab, movable);
                else if (node == p)
                    tab[index] = node.next;
                else
                    p.next = node.next;
                ++modCount;
                --size;
                afterNodeRemoval(node);
                return node;
            }
        }
        return null;
    }
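The two public remove overloads differ only in the matchValue flag they pass to removeNode. A brief usage sketch (RemoveDemo is a hypothetical class name):

```java
import java.util.HashMap;
import java.util.Map;

final class RemoveDemo {
    static String run() {
        Map<String, Integer> m = new HashMap<>();
        m.put("a", 1);
        m.put("b", 2);
        boolean r1 = m.remove("a", 99); // value does not match: nothing is removed
        boolean r2 = m.remove("a", 1);  // key and value match: mapping removed
        Integer r3 = m.remove("b");     // returns the removed value
        Integer r4 = m.remove("b");     // key already gone: returns null
        return r1 + "," + r2 + "," + r3 + "," + r4 + "," + m.size();
    }

    public static void main(String[] args) {
        System.out.println(run()); // false,true,2,null,0
    }
}
```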

replace()

    // Replaces the value only if the key is present; returns the old value or null.
    public V replace(K key, V value) {
        Node<K,V> e;
        if ((e = getNode(hash(key), key)) != null) {
            V oldValue = e.value;
            e.value = value;
            afterNodeAccess(e);
            return oldValue;
        }
        return null;
    }

    // Replaces the value only if the key is currently mapped to oldValue.
    public boolean replace(K key, V oldValue, V newValue) {
        Node<K,V> e; V v;
        if ((e = getNode(hash(key), key)) != null &&
            ((v = e.value) == oldValue || (v != null && v.equals(oldValue)))) {
            e.value = newValue;
            afterNodeAccess(e);
            return true;
        }
        return false;
    }

    public void replaceAll(BiFunction<? super K, ? super V, ? extends V> function) {
        Node<K,V>[] tab;
        if (function == null)
            throw new NullPointerException();
        if (size > 0 && (tab = table) != null) {
            int mc = modCount;
            for (int i = 0; i < tab.length; ++i) {
                for (Node<K,V> e = tab[i]; e != null; e = e.next) {
                    e.value = function.apply(e.key, e.value);
                }
            }
            if (modCount != mc)
                throw new ConcurrentModificationException();
        }
    }

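replaceAll works with a functional interface: it replaces the value of every node with the result of a user-supplied function. The function takes the key and the value as parameters, and its result must be assignable to the value type.

Example of using replaceAll: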

    Map<String, Object> c = new HashMap<>();
    c.put("1", 1);
    c.put("2", 2);
    c.put("3", 3);
    c.replaceAll((s, o) -> s + o);
    System.out.println(c); // {1=11, 2=22, 3=33}
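The two replace overloads shown earlier never create a mapping; they only update an existing one. A short usage sketch (ReplaceDemo is a hypothetical class name):

```java
import java.util.HashMap;
import java.util.Map;

final class ReplaceDemo {
    static String run() {
        Map<String, Integer> m = new HashMap<>();
        m.put("a", 1);
        Integer r1 = m.replace("a", 2);    // returns 1 (old value); a is now 2
        Integer r2 = m.replace("zzz", 9);  // returns null; no mapping is created
        boolean r3 = m.replace("a", 1, 3); // false: the current value is 2, not 1
        boolean r4 = m.replace("a", 2, 3); // true: a is now 3
        return r1 + "," + r2 + "," + r3 + "," + r4 + "," + m;
    }

    public static void main(String[] args) {
        System.out.println(run()); // 1,null,false,true,{a=3}
    }
}
```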

Hash-related computations in the class

Computing the hash of a key

    static final int hash(Object key) {
        int h;
        return (key == null) ? 0 : (h = key.hashCode()) ^ (h >>> 16);
    }

key.hashCode() ^ (key.hashCode() >>> 16)

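This line of code is called the "perturbation function". Its job is to mix the high 16 bits of the hash code into the low 16 bits so that the low bits of the value returned by hash(), which are the only bits used to pick a bucket in small tables, are better distributed and collisions are reduced. For background, see the discussion titled "JDK 源码中 HashMap 的 hash 方法原理是什么?" (What is the principle behind the hash method in the JDK HashMap source?). The hash method also shows that HashMap allows a null key: when the key is null the hash is 0, so the element is stored at index 0 of the array.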
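A small sketch shows why the perturbation matters. It uses two hand-picked hash code values that differ only in their high bits; SpreadDemo, spread and index are hypothetical names, and the two functions copy the expressions used by HashMap.

```java
final class SpreadDemo {
    // Same computation as HashMap.hash(), applied to a raw hashCode value.
    static int spread(int h) {
        return h ^ (h >>> 16);
    }

    // Bucket index for a table of length n (n must be a power of two).
    static int index(int hash, int n) {
        return (n - 1) & hash;
    }

    public static void main(String[] args) {
        int h1 = 0x10000, h2 = 0x20000; // differ only above bit 15
        int n = 16;
        // Without spreading, both land in bucket 0.
        System.out.println(index(h1, n) + " " + index(h2, n));
        // With spreading, the high bits reach the index: buckets 1 and 2.
        System.out.println(index(spread(h1), n) + " " + index(spread(h2), n));
    }
}
```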

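Computing the storage position

The array index at which an entry is stored is computed from the hash of its key, using the expression that appears in putVal and getNode:

    (n - 1) & hash

where n is the length of the array.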
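Because the array length is always a power of two, the expression (n - 1) & hash used in putVal and getNode yields the same result as a non-negative modulo, including for negative hashes. The sketch below checks this against Math.floorMod; IndexDemo and indexFor are hypothetical names.

```java
final class IndexDemo {
    // Bucket index as computed in putVal and getNode; n must be a power of two.
    static int indexFor(int hash, int n) {
        return (n - 1) & hash;
    }

    public static void main(String[] args) {
        int n = 16;
        for (int hash : new int[] {37, -1, -7, 1 << 20}) {
            System.out.println(hash + " -> " + indexFor(hash, n)
                + " (floorMod: " + Math.floorMod(hash, n) + ")");
        }
    }
}
```

The bit mask is cheaper than a division and never produces a negative index, which is one reason the capacity is kept at a power of two.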

Computation during resizing

    e.hash & oldCap // oldCap is the length of the old array

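This is the test used when the table grows. If the result is 0, the node keeps its current index in the new array; otherwise it moves to index + oldCap. It works together with the index computation used when storing: doubling the array length adds exactly one more bit of the hash to the index, and this expression reads that bit.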
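The claim that a node either stays at its index or moves by exactly oldCap can be verified numerically. The sketch below compares the shortcut used in resize() with a full recomputation of the index; SplitDemo and its method names are hypothetical.

```java
final class SplitDemo {
    // Index in the new table, derived the way resize() does it.
    static int newIndexBySplit(int hash, int oldCap) {
        int oldIndex = hash & (oldCap - 1);
        return (hash & oldCap) == 0 ? oldIndex : oldIndex + oldCap;
    }

    // Index in the new table, recomputed from scratch.
    static int newIndexDirect(int hash, int oldCap) {
        return hash & (2 * oldCap - 1);
    }

    public static void main(String[] args) {
        int oldCap = 16;
        // 5, 21, 37 and 53 all share bucket 5 in a table of 16 buckets.
        for (int hash : new int[] {5, 21, 37, 53}) {
            System.out.println(hash + ": " + (hash & (oldCap - 1)) + " -> "
                + newIndexBySplit(hash, oldCap));
        }
    }
}
```

After doubling to 32 buckets, hashes 5 and 37 stay in bucket 5 while 21 and 53 move to bucket 21, so no key needs its hash recomputed.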

Iterators in HashMap

Values and values()

    final class Values extends AbstractCollection<V> {
        public final int size()                 { return size; }
        public final void clear()               { HashMap.this.clear(); }
        public final Iterator<V> iterator()     { return new ValueIterator(); }
        public final boolean contains(Object o) { return containsValue(o); }
        public final Spliterator<V> spliterator() {
            return new ValueSpliterator<>(HashMap.this, 0, -1, 0, 0);
        }
        public final void forEach(Consumer<? super V> action) {
            Node<K,V>[] tab;
            if (action == null)
                throw new NullPointerException();
            if (size > 0 && (tab = table) != null) {
                int mc = modCount;
                for (int i = 0; i < tab.length; ++i) {
                    for (Node<K,V> e = tab[i]; e != null; e = e.next)
                        action.accept(e.value);
                }
                if (modCount != mc)
                    throw new ConcurrentModificationException();
            }
        }
    }

    // The view is created lazily and cached in the values field.
    public Collection<V> values() {
        Collection<V> vs = values;
        if (vs == null) {
            vs = new Values();
            values = vs;
        }
        return vs;
    }

KeySet and keySet()

    // The view is created lazily and cached in the keySet field.
    public Set<K> keySet() {
        Set<K> ks = keySet;
        if (ks == null) {
            ks = new KeySet();
            keySet = ks;
        }
        return ks;
    }

    final class KeySet extends AbstractSet<K> {
        public final int size()                 { return size; }
        public final void clear()               { HashMap.this.clear(); }
        public final Iterator<K> iterator()     { return new KeyIterator(); }
        public final boolean contains(Object o) { return containsKey(o); }
        public final boolean remove(Object key) {
            return removeNode(hash(key), key, null, false, true) != null;
        }
        public final Spliterator<K> spliterator() {
            return new KeySpliterator<>(HashMap.this, 0, -1, 0, 0);
        }
        public final void forEach(Consumer<? super K> action) {
            Node<K,V>[] tab;
            if (action == null)
                throw new NullPointerException();
            if (size > 0 && (tab = table) != null) {
                int mc = modCount;
                for (int i = 0; i < tab.length; ++i) {
                    for (Node<K,V> e = tab[i]; e != null; e = e.next)
                        action.accept(e.key);
                }
                if (modCount != mc)
                    throw new ConcurrentModificationException();
            }
        }
    }

EntrySet and entrySet()

    public Set<Map.Entry<K,V>> entrySet() {
        Set<Map.Entry<K,V>> es;
        return (es = entrySet) == null ? (entrySet = new EntrySet()) : es;
    }

    final class EntrySet extends AbstractSet<Map.Entry<K,V>> {
        public final int size()                 { return size; }
        public final void clear()               { HashMap.this.clear(); }
        public final Iterator<Map.Entry<K,V>> iterator() {
            return new EntryIterator();
        }
        public final boolean contains(Object o) {
            if (!(o instanceof Map.Entry))
                return false;
            Map.Entry<?,?> e = (Map.Entry<?,?>) o;
            Object key = e.getKey();
            Node<K,V> candidate = getNode(hash(key), key);
            return candidate != null && candidate.equals(e);
        }
        public final boolean remove(Object o) {
            if (o instanceof Map.Entry) {
                Map.Entry<?,?> e = (Map.Entry<?,?>) o;
                Object key = e.getKey();
                Object value = e.getValue();
                return removeNode(hash(key), key, value, true, true) != null;
            }
            return false;
        }
        public final Spliterator<Map.Entry<K,V>> spliterator() {
            return new EntrySpliterator<>(HashMap.this, 0, -1, 0, 0);
        }
        public final void forEach(Consumer<? super Map.Entry<K,V>> action) {
            Node<K,V>[] tab;
            if (action == null)
                throw new NullPointerException();
            if (size > 0 && (tab = table) != null) {
                int mc = modCount;
                for (int i = 0; i < tab.length; ++i) {
                    for (Node<K,V> e = tab[i]; e != null; e = e.next)
                        action.accept(e);
                }
                if (modCount != mc)
                    throw new ConcurrentModificationException();
            }
        }
    }
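As the sources above show, Values, KeySet and EntrySet hold no data of their own; every call is forwarded to the enclosing HashMap. The following usage sketch illustrates that changes made through a view show up in the map (ViewDemo is a hypothetical class name).

```java
import java.util.HashMap;
import java.util.Map;

final class ViewDemo {
    static String run() {
        Map<String, Integer> m = new HashMap<>();
        m.put("a", 1);
        m.put("b", 2);
        m.put("c", 3);
        m.keySet().remove("a");                   // removes the mapping a=1 from the map
        m.values().remove(Integer.valueOf(2));    // removes one mapping whose value equals 2
        for (Map.Entry<String, Integer> e : m.entrySet()) {
            e.setValue(e.getValue() * 10);        // writes through to the node in the map
        }
        return m + "," + m.keySet().size();
    }

    public static void main(String[] args) {
        System.out.println(run()); // {c=30},1
    }
}
```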

Iterator

    abstract class HashIterator {
        Node<K,V> next;        // next entry to return
        Node<K,V> current;     // current entry
        int expectedModCount;  // for fast-fail
        int index;             // current slot

        HashIterator() {
            // Fail-fast: the HashMap must not be structurally modified while iterating.
            expectedModCount = modCount;
            Node<K,V>[] t = table;
            current = next = null;
            index = 0;
            if (t != null && size > 0) { // advance to first entry
                // Scan forward until a non-empty bucket is found;
                // next becomes the head node of that bucket.
                do {} while (index < t.length && (next = t[index++]) == null);
            }
        }

        public final boolean hasNext() {
            return next != null;
        }

        final Node<K,V> nextNode() {
            Node<K,V>[] t;
            Node<K,V> e = next;
            if (modCount != expectedModCount)
                throw new ConcurrentModificationException();
            if (e == null)
                throw new NoSuchElementException();
            // End of the current bucket: move on to the next non-empty bucket.
            if ((next = (current = e).next) == null && (t = table) != null) {
                do {} while (index < t.length && (next = t[index++]) == null);
            }
            return e;
        }

        public final void remove() {
            Node<K,V> p = current;
            if (p == null)
                throw new IllegalStateException();
            if (modCount != expectedModCount)
                throw new ConcurrentModificationException();
            current = null;
            K key = p.key;
            removeNode(hash(key), key, null, false, false);
            expectedModCount = modCount; // resync so the iterator stays valid
        }
    }

    final class KeyIterator extends HashIterator
        implements Iterator<K> {
        public final K next() { return nextNode().key; }
    }

    final class ValueIterator extends HashIterator
        implements Iterator<V> {
        public final V next() { return nextNode().value; }
    }

    final class EntryIterator extends HashIterator
        implements Iterator<Map.Entry<K,V>> {
        public final Map.Entry<K,V> next() { return nextNode(); }
    }

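expectedModCount = modCount implements the fail-fast mechanism: from the moment an iterator is created until the iteration ends, the HashMap must not be structurally modified, except through the iterator's own remove(). An example: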

    Map<String, Student> c = new HashMap<>();
    Student s1 = new Student("1"); // Student is the author's own class, not shown in this post
    System.out.println(s1.equals(s1));
    c.put("1", s1);
    c.put("2", s1);
    Set<Map.Entry<String, Student>> entries = c.entrySet();
    Iterator<Map.Entry<String, Student>> iterator = entries.iterator();
    c.put("6", s1); // structural change after the iterator was created
    while (iterator.hasNext()) {
        System.out.println(iterator.next()); // the first next() throws ConcurrentModificationException
    }
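For contrast, the sketch below shows the supported way to delete entries while iterating, which is to call remove() on the iterator itself, next to a case that trips the fail-fast check. IteratorRemoveDemo and its method names are hypothetical; the TreeMap is used only to get a stable print order.

```java
import java.util.ConcurrentModificationException;
import java.util.HashMap;
import java.util.Iterator;
import java.util.Map;
import java.util.TreeMap;

final class IteratorRemoveDemo {
    // Removing through the iterator resyncs expectedModCount, so no exception is thrown.
    static String removeEvenValues() {
        Map<String, Integer> m = new HashMap<>();
        for (int i = 1; i <= 6; i++) {
            m.put("k" + i, i);
        }
        Iterator<Map.Entry<String, Integer>> it = m.entrySet().iterator();
        while (it.hasNext()) {
            if (it.next().getValue() % 2 == 0) {
                it.remove();
            }
        }
        return new TreeMap<>(m).toString();
    }

    // Adding a new key through the map during iteration is detected by the next call to next().
    static boolean putDuringIterationThrows() {
        Map<String, Integer> m = new HashMap<>();
        m.put("a", 1);
        m.put("b", 2);
        try {
            for (String key : m.keySet()) {
                m.put("new-" + key, 0);
            }
            return false;
        } catch (ConcurrentModificationException expected) {
            return true;
        }
    }

    public static void main(String[] args) {
        System.out.println(removeEvenValues());          // {k1=1, k3=3, k5=5}
        System.out.println(putDuringIterationThrows());  // true
    }
}
```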

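To be continued

While reading the source this time I skipped the parts that involve red-black trees. I will update this post after studying red-black trees in more depth.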